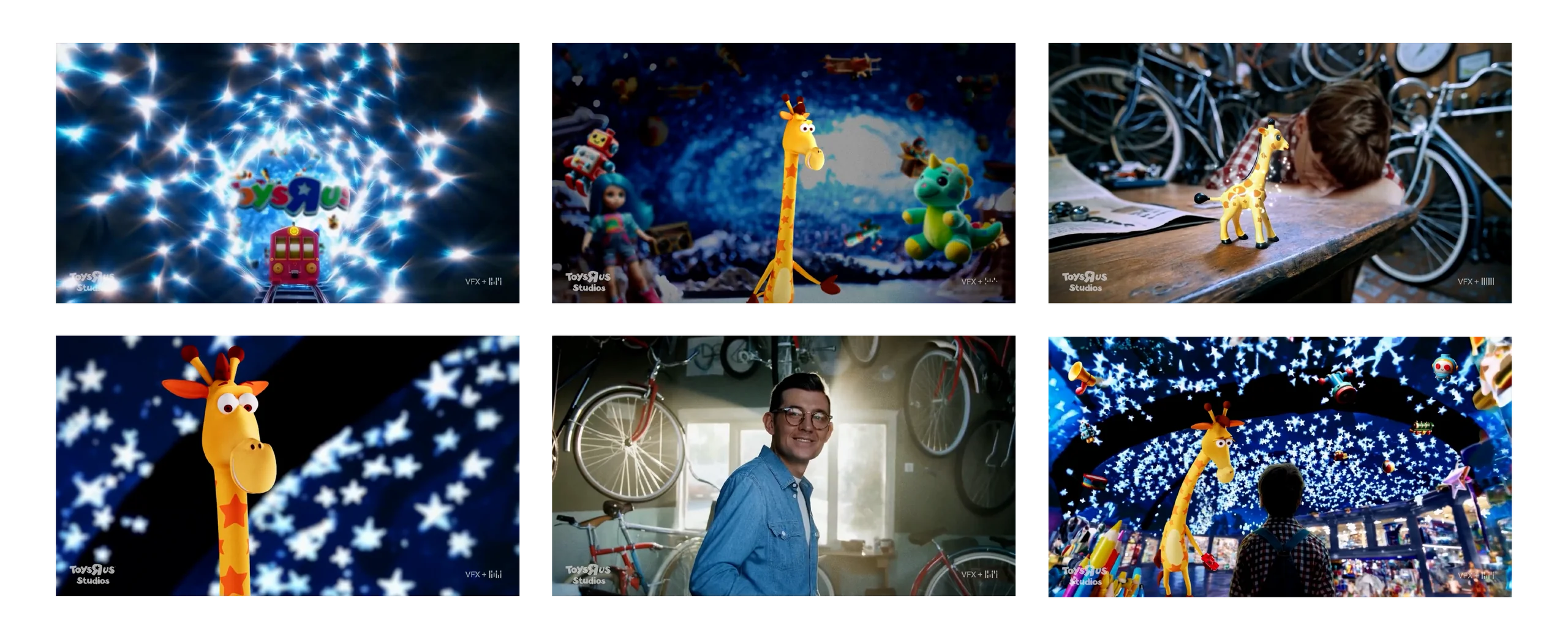

OpenAI’s Sora video engine is supposed to be the state of the art when it comes to creating footage that looks completely real. In an interesting move, the corpse of the company that was once Toys ’R’ Us (apparently it still lives on in Macy’s stores under that brand) used it to create an origin story for the brand, and that story features some weird stuff. Check it out here:

At first glance, the video is pretty good. A little kid grows up to be Charles Lazarus, founder of Toys ’R’ Us, and he gets his inspiration from his bike-mechanic father and enough peyote to send him careening through inner space with his toy giraffe.

The whole thing holds up well. I spotted a few AI artifacts, but not many. For example, the bike advertised in the window apparently lets two people ride while facing each other:

Next, little Charles is apparently sleeping on nothing:

And finally, in Toys ’R’ Us town you can apparently park anywhere you want, including on the sidewalk:

In all, however, you can see where we’re headed. This commercial probably took about five hours to “program” by twiddling with text prompts and another few hours to edit. That’s a single day. Doing the same thing at the same level of quality (minus the jarring hallucinations) would take at least a few days with a staff of about twenty. The talent would need food and hotels, the camera and sound people would need to come in at a day rate, and the editors and directors would need to spend real time and money getting these shots. Heck, you’d have to pay Opie here a few thousand dollars and get him a tutor on set.

But none of this cost Toys ’R’ Us any money. They got Sora for free, and all of the video, whether OpenAI will admit it or not, came from previously shot footage. In short, Toys ’R’ Us made this video with an AI trained on very precise b-roll that already existed somewhere in the universe of content.

The bottom line is this: yes, companies can use this technology to make commercials, and they can save millions by doing so. Thousands of people are out of work because of this technology, even if it’s only for this one gig. And the creative control any human director had over this project was limited at best. That they were able to get precise scenes featuring multiple characters is a testament to the prompting and engineering prowess of the OpenAI team, not to the director’s skill.

So this is a piece of content, it works, and it’s just fine. But what happens when someone doesn’t take as much care as OpenAI did? What happens when there are loads more artifacts and the director waves her hand and says “pish-posh”? What happens when this is all we’re watching, all day long?

This commercial worked. The next one might not. And my concern is that without proper oversight, this becomes the most common way to make movies, television, and commercials.

It shouldn’t be.
