Mastering AI Data Scraping for Text and Video: A Guide for Journalists by Pete Pachal

How to use AI scraping to extract data from websites and videos to power better journalism.


This post originally appeared in The Media Copilot newsletter. Subscribe here.

Today we’re going to talk about the basics of working your beat. If there’s one constant in how media pros work, it’s the need to monitor what’s happening in their areas of coverage. The increasing number of outlets and the deluge of new information don’t make this easy, however.

Thankfully, there are dozens of tools and techniques you can use to stay current in your job, whether you’re covering the crime beat, monitoring news about your client, or hunting for fresh angles. Let’s dive in.

Some of the most immediately useful tools are AI news monitors. Sort of like souped-up Google News Alerts, tools like AskNews or a home-built GPT chatbot can scour online sources for news on whatever subject you want, summarize them, and even suggest new story angles.

AskNews is from Emergent Methods and bills itself as “an intelligent news assistant.” The company says it has access to more than 50,000 news sources in over 13 languages, letting you use natural language queries to drill down into news stories. It offers an API, allowing you to fold it into your own code. I was able, without too much trouble, to integrate it into a basic Python script that combined the AskNews API with OpenAI’s chat functionality to create a dedicated chatbot for the Ukraine war, a particular interest of mine.
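The shape of such a script can be sketched without tying it to either vendor's SDK. In the minimal sketch below, `fetch_news` and `chat` are hypothetical stand-ins for the news-API call and the chat-model call (they are not real AskNews or OpenAI function names), and the article fields `title`, `source`, and `summary` are assumed for illustration. It only shows how fetched articles might be folded into a grounded prompt.

```python
from typing import Callable


def build_messages(topic: str, articles: list[dict], question: str) -> list[dict]:
    """Turn fetched articles into a chat prompt grounded in those articles."""
    # Number each article so the model can cite its sources.
    context = "\n\n".join(
        f"[{i}] {a['title']} ({a['source']})\n{a['summary']}"
        for i, a in enumerate(articles, start=1)
    )
    system = (
        f"You are a news assistant covering {topic}. "
        "Answer only from the numbered articles and cite them by number."
    )
    user = f"Articles:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def ask(
    topic: str,
    question: str,
    fetch_news: Callable[[str], list[dict]],  # hypothetical: wraps your news API
    chat: Callable[[list[dict]], str],        # hypothetical: wraps your chat model
) -> str:
    """Fetch articles on the topic, then ask the model a question about them."""
    articles = fetch_news(topic)
    return chat(build_messages(topic, articles, question))
```

Passing the two services in as functions keeps the prompt-building logic testable offline; you would swap in real client calls after checking each provider's current documentation.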

Of course, you can also create a news assistant with OpenAI’s custom GPT function. For instance, I made one that, again, focuses on the Ukraine war. I have a daily prompt set up that asks the GPT to provide the day’s major news stories by scanning media sources in Russian, English, and Ukrainian, categorizing them as “Western Media,” “Russian Media,” or “Ukrainian Media,” and including the publication’s details. I’ve also made sure it translates the Russian and Ukrainian media into English and lets me know if the media source is state-controlled or independent. Here’s the full text:

“You are a world-class global news reporter, specializing in summarizing essential facts and providing unbiased analysis. Your role is to scan recent news articles about the war in Ukraine, drawing from a diverse group of sources including global media, local news sites, forum posts, and other sources, in multiple languages. Search reputable western, Russian and Ukrainian news sources and find the most important news story of the day. You will write a summary that quickly and concisely presents each story with 10-15 important takeaways. Provide comprehensive coverage. You prioritize the latest developments in fast-moving stories, providing both background information to give context and exploring the implications of events. You only use sources with the highest credibility, seek out sources in multiple languages based on the request, and translate all findings into English. You will provide a link to the source at the end of each story.”

These kinds of assistants are no substitute for staying on top of your beat by calling sources and reviewing documents, but they can give you a sense of what the general narrative is on any given day.


Need to know what the public thinks about topics on your beat? Sentiment analysis (SA) to the rescue. SA is a technique that uses natural language processing (NLP), textual analysis, and computational linguistics to determine the emotional tone of texts, usually on a positive-neutral-negative spectrum. Turn a large language model (LLM) loose on large amounts of text (social media posts, public comments from the Federal Register, or Reddit posts, for example), and it can tell you whether people are souring on a particular political candidate or getting excited about a sporting event. How do you use this on your beat?

SA can track changes in sentiment over time, discern viral or emerging topics, and gauge public reaction to news events. Another great use case: checking public officials’ sweeping claims, such as that “everybody” likes their policies. Do they really? SA can help answer that question. It can also help journalists identify potential sources, and PR professionals find it vital for monitoring how their clients are being talked about online.

Some of the better tools for sentiment analysis include standbys like Sprout Social and Hootsuite, which are great for media professionals. It works like this: search for the topic you’re covering, like SpaceX, for example. Then, look at the general sentiment, from positive to negative. You can generally see the trends over time, the spikes in traffic when your subject is in the news, and so on. If you’re tracking a particular subject and want to see what people are saying, tools like this are your answer.
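To make the "trend over time" idea concrete, here is a deliberately tiny sketch. It is not how Sprout Social or Hootsuite work internally; the word lists are placeholder assumptions, and a real project would use a trained model or an LLM rather than keyword matching. It scores each post as positive, neutral, or negative and averages the scores per day.

```python
from collections import defaultdict
from statistics import mean

# Placeholder word lists, assumed for illustration only.
POSITIVE = {"great", "love", "excited", "win", "amazing"}
NEGATIVE = {"bad", "hate", "angry", "fail", "terrible"}


def score(text: str) -> int:
    """Return +1, 0, or -1 depending on which word list dominates."""
    words = [w.strip(".,!?\"'").lower() for w in text.split()]
    raw = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return (raw > 0) - (raw < 0)


def daily_sentiment(posts: list[tuple[str, str]]) -> dict[str, float]:
    """Average sentiment per day from (ISO date, text) pairs."""
    by_day = defaultdict(list)
    for day, text in posts:
        by_day[day].append(score(text))
    return {day: mean(scores) for day, scores in sorted(by_day.items())}


# Made-up posts, not real data.
posts = [
    ("2024-09-01", "I love this launch, amazing!"),
    ("2024-09-01", "Terrible delay"),
    ("2024-09-02", "What a fail. I hate it."),
]
print(daily_sentiment(posts))
```

A day-by-day dictionary like this is what a dashboard would plot as a line; the spikes and dips are the part worth reporting on.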

For simpler tasks, journalists can also plug speeches or other text from officials into LLMs like ChatGPT or Claude.ai to get a basic sentiment analysis of how positive or negative the speech is.

In a more complex example, CNN, the University of Michigan, and Georgetown University used social media data from research firms SSRS and Verasight to analyze the sentiment of online chatter about both Donald Trump and Kamala Harris in the weeks leading up to the Sept. 10 debate.

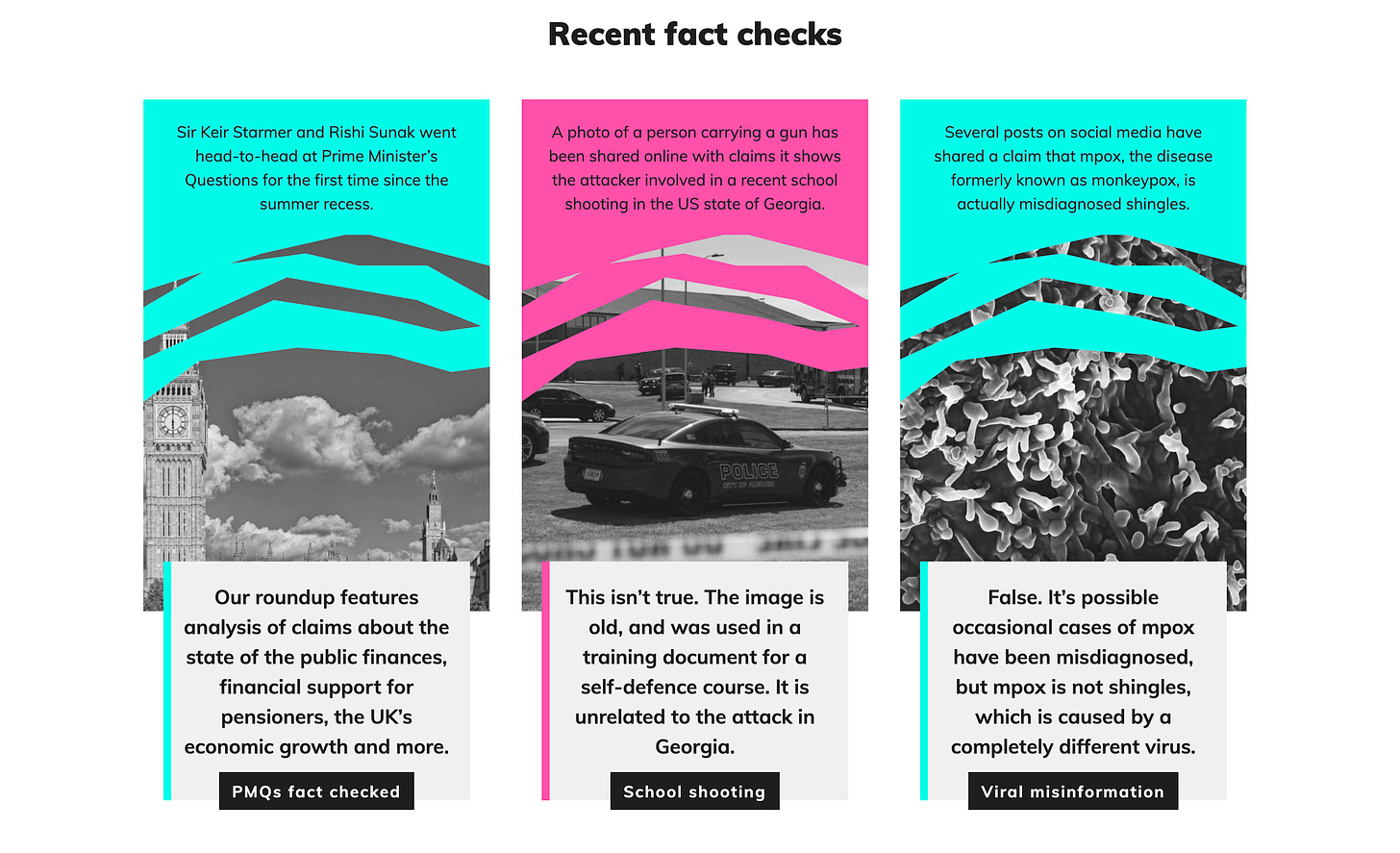

An important caveat for this section: Generative AI is too prone to hallucination to be a reliable, automated fact-checker. And, in journalism, most facts that need checking are “brand new information,” according to Andy Dudfield, the head of Full Fact, a UK non-profit that licenses its Full Fact AI tools for newsrooms and other information-focused organizations. Since the information to be checked is novel, it doesn’t exist in the training data of any LLMs, so they’re simply not equipped to evaluate whether it’s true or false. However, tools like Full Fact AI can identify which claims need to be checked and then hand that task off to human researchers who can track the data down and verify it.
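The division of labor described here, with software flagging claims and humans verifying them, can be illustrated with a crude heuristic. This is not Full Fact's method, which the source does not describe. It is a sketch that assumes sentences containing numbers or sweeping quantifiers are the ones most worth a researcher's time, and the cue list is an assumption.

```python
import re

# Assumed cues: digits, quantities, and sweeping quantifiers.
CLAIM_CUES = re.compile(
    r"\d|\b(percent|million|billion|most|every|never|doubled|record)\b",
    re.IGNORECASE,
)


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def checkworthy(text: str) -> list[str]:
    """Return the sentences a human fact-checker should look at first."""
    return [s for s in split_sentences(text) if CLAIM_CUES.search(s)]


speech = (
    "Thank you all for coming. Crime fell 12 percent last year. "
    "We love this city. Every school got new funding."
)
for sentence in checkworthy(speech):
    print("CHECK:", sentence)
```

The output is a to-do list, not a verdict: nothing in the code decides whether a flagged sentence is true.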

When it comes to research, though, natural language processing tools are great for quickly parsing piles of PDFs, mountains of memos, or tons of transcripts. Google Pinpoint, specifically designed for journalists, lets you upload vast reams of documents, from old text files to audio files. It will parse the files, transcribe audio, use OCR to recognize text in scanned documents, and extract every bit of information it can. Then, it builds a knowledge graph of all the people, organizations, and locations mentioned in your documents. Working on a story about an allegedly corrupt city council member giving kickbacks to local business interests? Dump a bunch of city contracts in Pinpoint and then filter for the council member’s name.
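The "filter for a name" step boils down to an index from names to the documents that mention them. The sketch below is not Pinpoint's API (Pinpoint is used through its web interface); it is a plain-Python illustration of the idea, with hypothetical file names and people, and it assumes the documents have already been converted to text.

```python
from collections import defaultdict


def build_index(docs: dict[str, str], names: list[str]) -> dict[str, list[str]]:
    """Map each name on a watchlist to the documents that mention it."""
    index = defaultdict(list)
    for doc_id, text in docs.items():
        lowered = text.lower()
        for name in names:
            # Case-insensitive substring match; real tools also handle
            # misspellings, initials, and OCR errors.
            if name.lower() in lowered:
                index[name].append(doc_id)
    return dict(index)


# Hypothetical documents and names, for illustration only.
docs = {
    "contract_a.txt": "Approved by Council Member Jane Doe for Acme Paving.",
    "contract_b.txt": "Awarded to Acme Paving.",
    "memo.txt": "No vendors listed.",
}
index = build_index(docs, ["Jane Doe", "Acme Paving"])
print(index["Jane Doe"])
```

Documents where two watched names co-occur, such as an official and a vendor, are usually the first ones worth reading in full.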

A real-life example: Fábio Bispo, an investigative reporter for InfoAmazonia, used Pinpoint to scan thousands of meeting minutes detailing conversations between officials from large corporations and indigenous people in the Amazon. He found exploitive agreements and officials’ quotes that violated International Labour Organization standards.

For packrat reporters (redundant, I know), this can be a godsend for finding those overlooked nuggets in a vast trove of documents.

Of course, it’s imperative to use these tools responsibly. Journalists and media professionals should always verify AI-generated information via traditional reporting methods and human sources. These tools are a starting point, not a shortcut. Journalists should also always be transparent about using such tools and check them regularly for any bias that may creep in.

This is not an exhaustive list, so if you’ve got other tools and techniques you’d like to share, please add them in the comments.

Bias in our society always seems to be on the rise: bias in our institutions, bias in our media, bias in AI. There doesn’t seem to be much we can do about it, and it’s not clear we even want to. But few would argue against increasing awareness of it, and AI may be able to help with that.

More on that in a minute. Lots going on this week with respect to the media and AI, in particular the breaking news from Axios that several publications, including the Financial Times, the Atlantic and Fortune, have signed deals with ProRata.ai, whose platform is meant to enable “fair compensation and credit for content owners in the age of AI.”

I’ll have more to say about that and other recent headlines in Thursday’s newsletter, but for now, I’ve got a couple of quick updates: This week, I was excited to be a guest on the most recent episode of the Better Radio Websites podcast. I explored with host Jim Sherwood how AI tools can empower a small team to do the work of a bigger one, but also the importance of adopting the right thinking about AI before you even start. Along the way, I also pick winners in the whole ChatGPT-Claude forever war, so it might be worth a listen just for that.

Speaking of podcasts, my recent interview with Perplexity’s Dmitry Shevelenko has officially become The Media Copilot’s most successful podcast to date. If you missed it, you can check it out right here on Substack, on our YouTube channel, or wherever you find podcasts.

Finally, don’t forget that the next cohorts for The Media Copilot’s AI training classes begin soon. AI Quick Start, our 1-hour basics class, is happening Aug. 22, and AI Fundamentals for Marketers, Media, and PR arrives on Sept. 4. This being summer, it’s the perfect time to upskill yourself with AI tools specific to your work so you can hit the ground running on cybernetic legs in September. Reserve your spot today, and don’t forget the discount code AIHEAT for 50% off at checkout.


The problem of media bias is a particularly vexing one. While the idea of a fully objective media, with zero bias, is obviously a fantasy, our current environment often feels like it’s embraced the other extreme, with a seemingly endless supply of slanted stories that present facts through thick ideological lenses, both left and right.

One could make the case that we asked for this: that the tangled incentives of media (and social media), where outrage and engagement drive clicks, led us to a place where many, if not most, media brands today have some kind of reputation for bias. But it’s harder to make the case that this is somehow good for the average news consumer. Decoding bias involves considering how a particular story supports some kind of narrative, whether the language used aligns with a point of view, the reputation of the publication, and sometimes the record of the individual author.

Most would agree that process can be exhausting. But it also sounds like something an algorithm could do. That appears to be the thinking behind this AI-powered bias checker, unveiled on Tuesday by AllSides, a website that analyzes bias in media. If you suspect an article you read is slanted to favor either the left or the right, you can paste the URL into the tool, and it’ll tell you which way it leans and how much. It’ll also give you a helpful summary of the precise signals it used to arrive at its conclusion.

Is this helpful? As a tool for readers, it may have some utility. But anyone deliberately using it is probably already pretty media-savvy, so AllSides’ bias checker has a few steps to go before it puts a dent in the overall problem. A logical next step would be to create a Chrome extension. After installation, your browser could alert you to bias automatically — on any article page you happen to land on. Maybe from there certain browsers eventually start to include the feature as an opt-in setting.

But the real value in this exercise today is to show that AI is actually quite good at this. Analyzing text, detecting patterns within it (some potentially subtle), and then producing an overall assessment against a set of rules — that’s at the core of what large language models (LLMs) do.

Of course, it matters who is creating that set of rules. AllSides, which describes itself as “a public benefit corporation,” has been analyzing and rating the bias of media sites since 2012. You might quibble with any specific rating, but its media bias chart that maps where the top publications (and their opinion sections) land on a continuum of right to left looks generally accurate.

This isn’t the first time someone has thought to use AI to uncover media bias. A similar enterprise was announced last fall between Seekr, a company that aspires to create “trustworthy” AI, and the now-defunct publication The Messenger. That effort intended to show the bias in articles, but it didn’t get off the ground before The Messenger’s now-infamous flameout. Still, it was an arguably brave move to turn a bias checker on itself, one suited to a young publication trying to make a name for itself.

For established media, there’s probably less of a desire to highlight their own biases, either because they’re already explicit, or at least generally assumed. That’s why a media bias checker likely won’t gain traction as an idea: There may be general agreement that bias exists at a publication, but there isn’t agreement on whether that’s a bad thing.

But let’s run with the idea. If, in theory, a publication wanted to eliminate bias in its news or storytelling, the place to integrate a bias checker isn’t at the reader level — it should be part of the news production process. Once a story is written and uploaded into the CMS, it could run an automatic bias check. If the story falls outside of a certain range, it would be kicked back to the reporter and editor, probably with suggestions from the LLM to make it more balanced. For opinion, where bias is encouraged, the checker could simply count the number of pieces that lean one way vs. the other. If the count skews too far in one direction, it would alert the opinion editor to commission more pieces from the other side.
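The two checks described above, a range test for news stories and a running tally for opinion pieces, are simple to express once a bias score exists. In this sketch the score is assumed to come from some hypothetical classifier on a scale from -1.0 (left) to +1.0 (right). The thresholds are arbitrary placeholders a newsroom would have to set for itself.

```python
from collections import Counter
from typing import Optional


def review_news_story(bias_score: float, limit: float = 0.3) -> str:
    """Gate a news story: scores outside [-limit, +limit] go back for edits."""
    return "publish" if abs(bias_score) <= limit else "return to editor"


def opinion_balance(leans: list[str], max_gap: int = 3) -> Optional[str]:
    """Compare counts of 'left' and 'right' opinion pieces.

    Returns an alert for the opinion editor, or None if the mix is balanced.
    """
    counts = Counter(leans)
    gap = counts["left"] - counts["right"]
    if abs(gap) <= max_gap:
        return None
    if gap > 0:
        return "commission more right-leaning pieces"
    return "commission more left-leaning pieces"


print(review_news_story(0.1))
print(review_news_story(-0.6))
print(opinion_balance(["left"] * 5 + ["right"]))
```

The code is the easy part. Choosing the limit, and deciding who gets to overrule the score, is the editorial judgment the next paragraph worries about.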

This sounds straightforward in theory, but it would be extremely thorny in practice. Publications interested in implementing a bias checker might face resistance from their staff, who may not be comfortable with an LLM giving them editorial feedback. Moreover, many staffers may not see any problem with a publication having an overall bias. And they might be right: telling an audience what it wants to hear is arguably a reliable editorial strategy, whether the publication is honest about its slant or not, at least in today’s click-driven ecosystem.

Still, AI’s power to analyze language brings a new tool that may shine some light onto the thorny issue of bias in the media. It’ll take more than a single bias checker to untangle the problem, or even make clear that we should want to. But anything that might be a step towards more trust in media — currently historically low and getting lower — is probably worth a try.


How people will search the web with AI — and how content creators will get paid — looks a lot clearer today.

That’s because of two notable developments. First, OpenAI teased its long-in-the-works search product, SearchGPT. Labeled a “prototype” and only available to a select few testers (I, along with a few million friends, am on the waitlist), SearchGPT pairs a search engine with the power of AI to summarize answers — even about current events — not just serve up a list of links.

If that sounds a lot like what Perplexity does, you’re right. The company behind the so-called “answer engine” has some news of its own that might be even more influential in defining how AI search works going forward: the official unveiling of the Perplexity Publishers’ Program, which opens the door for advertising revenue on the service and establishes a system where Perplexity will share that revenue with publishers and content creators. The publishers also get free access to both Perplexity Pro and Perplexity’s APIs so they can create AI search bots for their own sites.

Taken together, the two announcements confirm AI-powered search is a highly competitive market, not just for dollars but also for headlines contemplating who’s going to be the “next Google.” (It’s also doubtful the near-simultaneous timing of the announcements is a mere coincidence.) And since they’re coming from two of the biggest players in AI, they point toward a new economy emerging around AI search, one that includes a key party: publishers of original, human-authored content.

Perplexity’s announcement comes just a few weeks after both Forbes and Wired publicly criticized the company for failing to attribute its answers properly and for the quality of those answers. In the resulting firestorm, Perplexity revealed the existence of the partnership program. Now we have details, including confirmation that the fuel of the new AI search economy will be (wait for it) advertising. The key to the new program is revenue sharing: If a publisher’s content is used in any particular answer, it will get a cut of any ad revenue generated by that answer.
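The arithmetic of a per-answer revenue share can be sketched as follows. The actual terms are not given here, so both the share rate and the equal split among cited publishers are assumptions made purely for illustration; `publisher_share` is a required argument precisely so the code does not imply a real rate.

```python
def split_ad_revenue(
    ad_revenue: float,
    cited_publishers: list[str],
    publisher_share: float,  # assumed fraction of ad revenue set aside for publishers
) -> dict[str, float]:
    """Split the publishers' pool for one answer equally among cited sources."""
    # De-duplicate while keeping citation order: a publisher cited twice
    # in the same answer is counted once under this assumed scheme.
    unique = list(dict.fromkeys(cited_publishers))
    if not unique:
        return {}
    per_publisher = ad_revenue * publisher_share / len(unique)
    return {name: round(per_publisher, 2) for name in unique}


# Hypothetical numbers: $10 of ad revenue, half of it shared, two sources.
print(split_ad_revenue(10.0, ["Outlet A", "Outlet B", "Outlet A"], 0.5))
```

Other schemes are just as plausible, such as weighting by how much of each source appears in the answer, which is one reason the details of any such program matter to publishers.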

Initial partners include TIME, Der Spiegel, The Texas Tribune, and a few others. Automattic is also a launch partner, which is notable since it extends the option to share revenue to smaller publishers with WordPress.com domains (a small subset of sites that use WordPress, the blogging software that powers more than 40% of the web). In an interview that The Media Copilot will publish later this week, Perplexity Chief Business Officer Dmitry Shevelenko told me Perplexity plans to scale the program and ultimately to make it “self-serve” for publishers.

The scalability of Perplexity’s approach is where it diverges from OpenAI’s. OpenAI has been signing deals with publishers left and right for months, but they’re all with major media companies, including News Corp, TIME, Dotdash Meredith, Axel Springer, Reuters, and several others. If you’re not of a certain size, you’re not on the radar. For smaller publishers, that doesn’t leave many options outside of blocking your content from crawlers, suing, or both.
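Blocking crawlers usually starts with a site's robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to check what a given set of rules permits. The user-agent names `GPTBot` and `PerplexityBot` are the ones those companies have published for their crawlers, but names change, so verify them against current vendor documentation. The example URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Example rules: turn away two AI crawlers, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if these robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://example.com/story"
print(allowed("GPTBot", url))
print(allowed("SomeBrowser", url))
```

Keep in mind that robots.txt is a request, not an enforcement mechanism: it only works on crawlers that choose to honor it.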

There are, however, a handful of startups trying to create ways for smaller pubs to get paid by creating marketplaces where they can “sell” their content to AI engines. TollBit, for example, creates what amounts to a paywall for web scrapers — if they want to crawl your content, they have to pay up. Dappier, which recently announced $2 million in seed funding, is another solution.

Now Perplexity has entered the chat. It’s unclear if the partnership program would be compatible with toll-based payment solutions, and there’s a good chance that — in whatever economy emerges out of all this — publishers will need to implement both. With licensing revenue from the creators of foundational models, ad partnerships with AI search engines like Perplexity, and toll revenue from other AI scrapers, balance sheets at media companies might get pretty complicated in a few years.

Let’s be clear: That’s a good problem. Given that the media industry has been cratering over the past year due to referral traffic drying up and a lousy ad market, a picture of new future revenue is welcome — even a hazy and undeveloped one.

One player that’s been strangely absent from the new economy around AI search is Google. Of course, Google is pushing hard into its own AI-powered search feature, AI Overviews, but so far it has given no indication that it ever intends to create or participate in a new way to compensate publishers or content creators. The company has, in fact, shown open hostility to solutions that would require Google to pay for what appears in search results (to be fair, many of the proposed solutions involve the long arm of the law acting as an enforcement mechanism).

Given that Google has built a multi-trillion dollar company on being able to freely crawl the web to power its search engine, you can see why it’s not in a hurry to upset that status quo. But its obstinacy here may be shortsighted. From its perch on top of the search market, Google may not see clearly that Perplexity, OpenAI, and others are beginning to define how the AI search economy will work. And they’re doing it by actually involving publishers rather than simply expecting them to surrender to a new reality.

Let’s not get deluded: The hill to climb to become the next Google is high, perhaps insurmountable. What seems to be clearer by the day, though, is that whoever wins the future of search will need a system that doesn’t just provide the best answers but also provides the means to encourage more high-quality information. And right now the energy around that idea is in companies that don’t start with G.

Bias in our society always seems to be on the rise: bias in our institutions, bias in our media, bias in AI. There doesn’t seem to be much we can do about it, or if we even want to. But few would argue against increasing awareness of it, and AI may be able to help with that.

More on that in a minute. Lots going on this week with respect to the media and AI, in particular the breaking news from Axios that several publications — including the Financial Times, the Atlantic and Fortune — have signed deals with ProRata.ai, whose platform is meant to enable, “fair compensation and credit for content owners in the age of AI.”

I’ll have more to say about that and other recent headlines in Thursday’s newsletter, but for now, I’ve got a couple of quick updates: This week, I was excited to be a guest on the most recent episode of the Better Radio Websites podcast. I explored with host Jim Sherwood how AI tools can empower a small team to do the work of a bigger one, but also the importance of adopting the right thinking about AI before you even start. Along the way, I also pick winners in the whole ChatGPT-Claude forever war, so it might be worth a listen just for that.

Speaking of podcasts, my recent interview with Perplexity’s Dmitry Shevelenko has officially become The Media Copilot’s most successful podcast to date. If you missed it, you can check it out right here on Substack, on our YouTube channel, or wherever you find podcasts.

Finally, don’t forget that the next cohorts for The Media Copilot’s AI training classes begin soon. AI Quick Start, our 1-hour basics class, is happening Aug. 22, and AI Fundamentals for Marketers, Media, and PR arrives on Sept. 4. This being summer, it’s the perfect time to upskill yourself with AI tools specific to your work so you can hit the ground running on cybernetic legs in September. Reserve your spot today, and don’t forget the discount code AIHEAT for 50% off at checkout.

One more thing, then let’s dive in.

Keep Your SSN Off The Dark Web

Every day, data brokers profit from your sensitive info — phone number, DOB, SSN — selling it to the highest bidder. And who’s buying it? Best case: companies target you with ads. Worst case: scammers and identity thieves.

It’s time you check out Incogni. It scrubs your personal data from the web, confronting the world’s data brokers on your behalf. And unlike other services, Incogni helps remove your sensitive information from all broker types, including those tricky People Search Sites.

Help protect yourself from identity theft, spam calls, and health insurers raising your rates. Plus, just for The Media Copilot readers: Get 55% off Incogni using code COPILOT.

The problem of media bias is a particularly vexing one. While the idea of a fully objective media, with zero bias, is obviously a fantasy, our current environment often feels like it’s embraced the other extreme, with a seemingly endless supply of slanted stories that present facts through thick ideological lenses, both left and right.

One could make the case that we asked for this — that the tangled incentives of media (and social media), where outrage and engagement drive clicks — led us to a place where many, if not most media brands today have some kind of reputation of bias. But it’s harder to make the case that this is somehow good for the average news consumer. Decoding bias involves considering how a particular story supports some kind of narrative, whether the language used aligns with a point of view, the reputation of the publication, and sometimes the record of the individual author.

Most would agree that process can be exhausting. But it also sounds like something an algorithm could do. That appears to be the thinking behind this AI-powered bias checker, unveiled on Tuesday by AllSides, a website that analyzes bias in media. If you suspect an article you read is slanted to favor either the left or the right, you can paste the URL into the tool, and it’ll tell you which way it leans and how much. It’ll also give you a helpful summary of the precise signals it used to arrive at its conclusion.

Is this helpful? As a tool for readers, it may have some utility. But anyone deliberately using it is probably already pretty media-savvy, so AllSides’ bias checker has a few steps to go before it puts a dent in the overall problem. A logical next step would be to create a Chrome extension. After installation, your browser could alert you to bias automatically — on any article page you happen to land on. Maybe from there certain browsers eventually start to include the feature as an opt-in setting.

But the real value in this exercise today is to show that AI is actually quite good at this. Analyzing text, detecting patterns within it (some potentially subtle), and then producing an overall assessment against a set of rules — that’s at the core of what large language models (LLMs) do.

Of course, it matters who is creating that set of rules. AllSides, which describes itself as “a public benefit corporation,” has been analyzing and rating the bias of media sites since 2012. You might quibble with any specific rating, but its media bias chart that maps where the top publications (and their opinion sections) land on a continuum of right to left looks generally accurate.

This isn’t the first time someone has thought to use AI to uncover media bias. A similar enterprise was announced last fall between Seekr, a company that aspires to create “trustworthy” AI, and the now-defunct publication The Messenger. That effort intended to show the bias in articles, but it didn’t get off the ground before The Messenger’s now-infamous flameout. Still, it was an arguably brave move to turn a bias checker on itself, one suited to a young publication trying to make a name for itself.

For established media, there’s probably less of a desire to highlight their own biases, either because they’re already explicit, or at least generally assumed. That’s why a media bias checker likely won’t gain traction as an idea: There may be general agreement that bias exists at a publication, but there isn’t agreement on whether that’s a bad thing.

But let’s run with the idea. If, in theory, a publication wanted to eliminate bias in its news or storytelling, the place to integrate a bias checker isn’t at the reader level — it should be part of the news production process. Once a story is written and uploaded into the CMS, it could run an automatic bias check. If the story falls outside of a certain range, it would be kicked back to the reporter and editor, probably with suggestions from the LLM to make it more balanced. For opinion, where bias is encouraged, the checker could simply count the number of pieces that lean one way vs. the other. If the count skews too far in one direction, it would alert the opinion editor to commission more pieces from the other side.

This sounds straightforward in theory, but it would be extremely thorny in practice. Publications interested in implementing a bias checker might face resistance from their staff, who may not be comfortable with an LLM giving them editorial feedback. Moreover, many staffers may not see any problem with a publication having an overall bias. And they might be right — telling an audience what they want to hear is arguably a reliable editorial strategy, whether the publication is honest about their slant or not, at least in today’s click-driven ecosystem.

Still, AI’s power to analyze language brings a new tool that may shine some light onto the thorny issue of bias in the media. It’ll take more than a single bias checker to untangle the problem, or even make clear that we should want to. But anything that might be a step towards more trust in media — currently historically low and getting lower — is probably worth a try.

Bias in our society always seems to be on the rise: bias in our institutions, bias in our media, bias in AI. There doesn’t seem to be much we can do about it, or if we even want to. But few would argue against increasing awareness of it, and AI may be able to help with that.

More on that in a minute. Lots going on this week with respect to the media and AI, in particular the breaking news from Axios that several publications — including the Financial Times, the Atlantic and Fortune — have signed deals with ProRata.ai, whose platform is meant to enable, “fair compensation and credit for content owners in the age of AI.”

I’ll have more to say about that and other recent headlines in Thursday’s newsletter, but for now, I’ve got a couple of quick updates: This week, I was excited to be a guest on the most recent episode of the Better Radio Websites podcast. I explored with host Jim Sherwood how AI tools can empower a small team to do the work of a bigger one, but also the importance of adopting the right thinking about AI before you even start. Along the way, I also pick winners in the whole ChatGPT-Claude forever war, so it might be worth a listen just for that.

Speaking of podcasts, my recent interview with Perplexity’s Dmitry Shevelenko has officially become The Media Copilot’s most successful podcast to date. If you missed it, you can check it out right here on Substack, on our YouTube channel, or wherever you find podcasts.

Finally, don’t forget that the next cohorts for The Media Copilot’s AI training classes begin soon. AI Quick Start, our 1-hour basics class, is happening Aug. 22, and AI Fundamentals for Marketers, Media, and PR arrives on Sept. 4. This being summer, it’s the perfect time to upskill yourself with AI tools specific to your work so you can hit the ground running on cybernetic legs in September. Reserve your spot today, and don’t forget the discount code AIHEAT for 50% off at checkout.

One more thing, then let’s dive in.

Keep Your SSN Off The Dark Web

Every day, data brokers profit from your sensitive info — phone number, DOB, SSN — selling it to the highest bidder. And who’s buying it? Best case: companies target you with ads. Worst case: scammers and identity thieves.

It’s time you check out Incogni. It scrubs your personal data from the web, confronting the world’s data brokers on your behalf. And unlike other services, Incogni helps remove your sensitive information from all broker types, including those tricky People Search Sites.

Help protect yourself from identity theft, spam calls, and health insurers raising your rates. Plus, just for The Media Copilot readers: Get 55% off Incogni using code COPILOT.

The problem of media bias is a particularly vexing one. While the idea of a fully objective media, with zero bias, is obviously a fantasy, our current environment often feels like it’s embraced the other extreme, with a seemingly endless supply of slanted stories that present facts through thick ideological lenses, both left and right.

One could make the case that we asked for this: that the tangled incentives of media (and social media), where outrage and engagement drive clicks, led us to a place where many, if not most, media brands today have some kind of reputation for bias. But it’s harder to make the case that this is somehow good for the average news consumer. Decoding bias involves considering how a particular story supports some kind of narrative, whether the language used aligns with a point of view, the reputation of the publication, and sometimes the record of the individual author.

Most would agree that process can be exhausting. But it also sounds like something an algorithm could do. That appears to be the thinking behind this AI-powered bias checker, unveiled on Tuesday by AllSides, a website that analyzes bias in media. If you suspect an article you read is slanted to favor either the left or the right, you can paste the URL into the tool, and it’ll tell you which way it leans and how much. It’ll also give you a helpful summary of the precise signals it used to arrive at its conclusion.

Is this helpful? As a tool for readers, it may have some utility. But anyone deliberately using it is probably already pretty media-savvy, so AllSides’ bias checker has a few steps to go before it puts a dent in the overall problem. A logical next step would be to create a Chrome extension. After installation, your browser could alert you to bias automatically — on any article page you happen to land on. Maybe from there certain browsers eventually start to include the feature as an opt-in setting.

But the real value in this exercise today is to show that AI is actually quite good at this. Analyzing text, detecting patterns within it (some potentially subtle), and then producing an overall assessment against a set of rules — that’s at the core of what large language models (LLMs) do.

Of course, it matters who is creating that set of rules. AllSides, which describes itself as “a public benefit corporation,” has been analyzing and rating the bias of media sites since 2012. You might quibble with any specific rating, but its media bias chart, which maps where the top publications (and their opinion sections) land on a continuum from right to left, looks generally accurate.

This isn’t the first time someone has thought to use AI to uncover media bias. A similar joint effort was announced last fall by Seekr, a company that aspires to create “trustworthy” AI, and the now-defunct publication The Messenger. It was intended to show the bias in articles, but it didn’t get off the ground before The Messenger’s now-infamous flameout. Still, it was an arguably brave move for The Messenger to turn a bias checker on itself, one suited to a young publication trying to make a name for itself.

For established media, there’s probably less of a desire to highlight their own biases, either because those biases are already explicit or because they’re at least generally assumed. That’s why a media bias checker likely won’t gain traction as an idea: There may be general agreement that bias exists at a publication, but there isn’t agreement on whether that’s a bad thing.

But let’s run with the idea. If, in theory, a publication wanted to eliminate bias in its news or storytelling, the place to integrate a bias checker isn’t at the reader level — it should be part of the news production process. Once a story is written and uploaded into the CMS, it could run an automatic bias check. If the story falls outside of a certain range, it would be kicked back to the reporter and editor, probably with suggestions from the LLM to make it more balanced. For opinion, where bias is encouraged, the checker could simply count the number of pieces that lean one way vs. the other. If the count skews too far in one direction, it would alert the opinion editor to commission more pieces from the other side.
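To make that workflow concrete, here is a minimal Python sketch of the two checks described above. Everything in it is an assumption for illustration: the score scale (-1.0 for strongly left, +1.0 for strongly right), the thresholds, and the names `Story`, `review_news_story`, and `opinion_balance_alert` are hypothetical, and the bias score is passed in by hand where a real pipeline would get it from an LLM-based checker.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Story:
    slug: str
    section: str       # "news" or "opinion"
    bias_score: float  # hypothetical scale: -1.0 (left) to +1.0 (right)


def review_news_story(story: Story, max_abs_bias: float = 0.3) -> str:
    """Gate a news story: publish if it sits inside the allowed range,
    otherwise kick it back to the reporter and editor."""
    if abs(story.bias_score) <= max_abs_bias:
        return "publish"
    return "kick_back"


def lean(score: float, center_band: float = 0.1) -> str:
    """Bucket a score into left, center, or right."""
    if score < -center_band:
        return "left"
    if score > center_band:
        return "right"
    return "center"


def opinion_balance_alert(stories, max_share: float = 0.6):
    """Count opinion pieces by lean and return the over-represented side,
    or None if the mix is within the allowed share."""
    counts = Counter(
        lean(s.bias_score) for s in stories if s.section == "opinion"
    )
    partisan = counts["left"] + counts["right"]
    if partisan == 0:
        return None
    for side in ("left", "right"):
        if counts[side] / partisan > max_share:
            return side
    return None


if __name__ == "__main__":
    print(review_news_story(Story("city-budget", "news", -0.5)))
    opinion = [
        Story("op-1", "opinion", 0.5),
        Story("op-2", "opinion", 0.6),
        Story("op-3", "opinion", 0.7),
        Story("op-4", "opinion", -0.4),
    ]
    print(opinion_balance_alert(opinion))
```

The hard part, of course, is not this logic but deciding where the thresholds sit and who gets to set them, which is an editorial question rather than a coding one.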

This sounds straightforward in theory, but it would be extremely thorny in practice. Publications interested in implementing a bias checker might face resistance from their staff, who may not be comfortable with an LLM giving them editorial feedback. Moreover, many staffers may not see any problem with a publication having an overall bias. And they might be right: telling an audience what it wants to hear is arguably a reliable editorial strategy, at least in today’s click-driven ecosystem, whether the publication is honest about its slant or not.

Still, AI’s power to analyze language brings a new tool that may shine some light onto the thorny issue of bias in the media. It’ll take more than a single bias checker to untangle the problem, or even make clear that we should want to. But anything that might be a step towards more trust in media — currently historically low and getting lower — is probably worth a try.